Top 10 machine learning algorithms to learn as a beginner

Anyone who starts with Machine Learning is bombarded by a horde of algorithms, and this can quickly become overwhelming. Beginners need to feel connected to the subject to stay interested in pursuing it further. In this article, I will present ten essential Machine Learning algorithms that every beginner should know.

Linear Regression

Linear Regression is usually the very first algorithm taught to beginners. It assumes a linear relationship between the input and the output, and we use it to predict values in a continuous range. For instance, suppose your sample dataset has people's ages as the input and their weights as the output. Using a loss function computed on this dataset, we can fit a straight line through the points. The loss function is usually the sum of the squared differences between the actual outputs and the outputs predicted by the model, and the best-fit line is the one that minimizes it. Using this line, we can then predict the weights of new people from their ages.
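To make the loss function concrete, here is a minimal sketch in plain Python (no libraries assumed) that fits a line to a tiny made-up age/weight dataset. It uses the closed-form least-squares solution for a single input feature rather than an iterative optimizer; the names `fit_line` and `squared_error_loss` are hypothetical, chosen for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form solution that minimizes the sum of squared errors
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def squared_error_loss(xs, ys, slope, intercept):
    """Sum of squared differences between actual and predicted outputs."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Made-up ages (years) and weights (kg), for illustration only
ages = [10, 15, 20, 25, 30]
weights = [32.0, 50.0, 62.0, 68.0, 70.0]

slope, intercept = fit_line(ages, weights)
print(f"weight = {slope:.2f} * age + {intercept:.2f}")
print(f"loss of the best-fit line: {squared_error_loss(ages, weights, slope, intercept):.2f}")
print(f"predicted weight at age 22: {slope * 22 + intercept:.1f} kg")
```

With more than one input feature, the same idea is usually solved with linear algebra or gradient descent; the single-feature formula is simply the easiest one to read.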

Logistic Regression

Although its name contains the word “regression,” Logistic Regression is used to predict discrete values (mostly for binary classification). We first compute a linear combination of the inputs, just as in linear regression, and then pass the result through the sigmoid (logistic) function, which maps any real value to a probability between 0 and 1. We then turn this probability into a discrete class using a threshold. A common choice is 0.5: all inputs whose sigmoid output is greater than 0.5 are assigned to class “1”, and inputs whose output is less than 0.5 are assigned to class “0”.

Decision Trees

Decision Trees are one of the most intuitive and interpretable methods for classification tasks. Each internal node of the “tree” holds a test on one of the features, each branch (edge) corresponds to an outcome of that test, and each leaf holds an output label. Therefore, given a specific set of input values for each feature, we can trace a path from the root down to a leaf to obtain a unique classification label. We build the tree through “recursive partitioning”: we split, or “partition,” the dataset into subsets on the feature value that best separates the classes, and then repeat the same process on each subset “recursively” until the subsets are pure or a stopping rule is reached. To pick the best split at each node, we commonly measure the impurity of the resulting subsets with the Gini Index (entropy, through information gain, is another common criterion).
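As an illustration of recursive partitioning with the Gini Index, here is a minimal sketch of a classification tree in plain Python. It assumes numeric features, binary splits of the form `feature <= threshold`, and a hypothetical `max_depth` stopping rule; real libraries add many refinements, so treat it as a teaching aid rather than a reference implementation.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Try every (feature, threshold) pair; keep the lowest weighted Gini."""
    best = None  # (weighted_gini, feature_index, threshold)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(X, y, depth=0, max_depth=3):
    # Leaf: the node is pure or the depth limit is reached
    if gini(y) == 0.0 or depth == max_depth:
        return Counter(y).most_common(1)[0][0]
    split = best_split(X, y)
    if split is None:
        return Counter(y).most_common(1)[0][0]
    _, f, t = split
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    # Recursive partitioning: grow a subtree on each subset
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left_idx], [y[i] for i in left_idx], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right_idx], [y[i] for i in right_idx], depth + 1, max_depth),
    }


def predict(tree, x):
    # Walk from the root to a leaf, following the test at each internal node
    while isinstance(tree, dict):
        tree = tree["left"] if x[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Made-up two-feature dataset
X = [[2.0, 1.0], [3.0, 1.5], [1.0, 3.0], [6.0, 2.0], [7.0, 3.5], [8.0, 1.0]]
y = ["no", "no", "no", "yes", "yes", "yes"]
tree = build_tree(X, y)
print(tree)
print(predict(tree, [2.5, 2.0]), predict(tree, [7.5, 2.0]))
```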

Support Vector Machine

Support Vector Machines (SVMs) are another effective tool for classification tasks. First, we place every data point in an n-dimensional space, where n is the number of features, so each point sits at the coordinates given by its feature values and carries its class label. We then look for a boundary (a line in 2D, a hyperplane in higher dimensions) that separates the classes. Placing this boundary can be tricky: some points of either class may lie very close to it, and a boundary that passes too close to them is likely to misclassify new data points. SVMs therefore choose the boundary that maximizes the margin, that is, the distance between the boundary and the nearest points of each class. Those nearest points are called “support vectors,” since they alone determine where the boundary lies, and two parallel margin lines pass through them on either side of the boundary. A new data point is then classified according to the side of the boundary on which it falls.
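The following plain-Python sketch trains a linear, soft-margin SVM by subgradient descent on the hinge loss, which is one simple way to approximate the maximum-margin boundary (libraries typically use more specialized solvers). Labels are assumed to be +1/-1, the data is made up, and the hyperparameters `lam`, `lr`, `epochs`, plus the `tol` used to report support vectors, are illustrative choices rather than recommended values.

```python
def decision(x, w, b):
    # Signed score: positive on one side of the boundary, negative on the other
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def train_linear_svm(X, y, lam=0.01, lr=0.01, epochs=1000):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * f(x))), with y in {+1, -1}."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * decision(xi, w, b) < 1:  # inside the margin or misclassified
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(x, w, b):
    return 1 if decision(x, w, b) >= 0 else -1


def support_vectors(X, y, w, b, tol=0.1):
    # Training points lying on (or within tol of) the margin lines y * f(x) = 1
    return [xi for xi, yi in zip(X, y) if yi * decision(xi, w, b) <= 1 + tol]


# Made-up, linearly separable 2D data
X = [[-2.0, -1.0], [-1.0, -2.0], [-2.0, -2.0], [2.0, 1.0], [1.0, 2.0], [2.0, 2.0]]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print("w =", w, "b =", b)
print("support vectors:", support_vectors(X, y, w, b))
```

In this toy data, the reported support vectors are the four points closest to the boundary; the two corner points farther away contribute nothing to the hinge-loss gradient once they are outside the margin.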

Naive Bayes Classifier

As the name suggests, the Naive Bayes Classifier uses Bayes’ Theorem to compute classification probabilities. Bayes’ Theorem gives the probability of an event based on prior knowledge of conditions related to it. Therefore, given the input features, the classifier computes the probability of each classification label given those specific feature values and picks the most probable label. It is called “Naive” because it assumes that the input features are independent of one another given the class. This is its most significant drawback, since the model cannot learn relationships between features. Even so, it is a very fast Machine Learning technique that sometimes outperforms far more complex models. It is widely used in text classification, sentiment analysis, and related tasks.
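Here is a minimal sketch of a multinomial Naive Bayes text classifier in plain Python. It assumes whitespace tokenization, add-one (Laplace) smoothing so an unseen word does not zero out a class probability, and log probabilities to avoid numerical underflow; the four training sentences are made up.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    class_counts = Counter(labels)       # how many documents per class
    word_counts = defaultdict(Counter)   # word frequencies per class
    vocab = set()
    for doc, label in zip(docs, labels):
        words = doc.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab


def predict_nb(doc, model):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)  # log prior P(class)
        total_words = sum(word_counts[label].values())
        for word in doc.lower().split():
            # "Naive" step: each word adds its own log-likelihood independently
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


docs = ["great movie loved it", "what a great film",
        "terrible plot boring", "boring and awful movie"]
labels = ["pos", "pos", "neg", "neg"]
model = train_nb(docs, labels)
print(predict_nb("loved this great film", model))
print(predict_nb("awful boring film", model))
```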

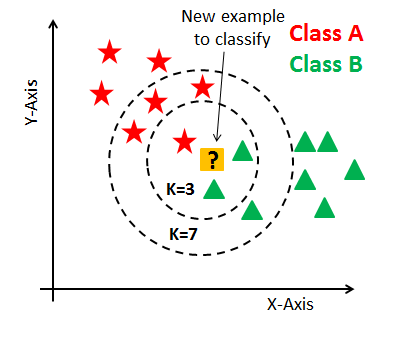

KNN Algorithm

The KNN, or K Nearest Neighbors, algorithm relies on spatial similarity. As the name suggests, we first place the dataset in an n-dimensional space, with one dimension per feature. To classify a new data point, we find the “k” training points nearest to it, usually measuring the distance between two points with the Euclidean distance. We then assign the majority label among those k neighbors to the new point, using a tiebreaker rule that we specify for ties. KNN is a “lazy learner” because it does not build a model during training; it simply stores the dataset and uses it every time it needs to make a prediction. Although the algorithm is relatively simple, choosing a good value of “k” can be tricky. Also, finding the k nearest neighbors means computing the distance to every stored point for each prediction, which is expensive on large datasets.
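A minimal KNN classifier in plain Python (3.8 or later, for `math.dist`) might look like this. The tiebreaker rule used here, preferring the tied label whose neighbor is closest, is one possible choice, and the data is made up for illustration.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by a majority vote among its k nearest training points."""
    # Nothing is learned up front: every prediction scans the stored dataset
    neighbors = sorted(
        (math.dist(x, query), label)  # Euclidean distance
        for x, label in zip(train_X, train_y)
    )[:k]
    votes = Counter(label for _, label in neighbors)
    top_count = max(votes.values())
    tied = {label for label, count in votes.items() if count == top_count}
    # Tiebreaker (our assumption): the tied label with the closest neighbor wins
    for _, label in neighbors:
        if label in tied:
            return label


# Made-up 2D training data
X = [[1.0, 1.0], [1.0, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 6.0], [6.0, 7.0]]
y = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(X, y, [1.5, 1.5]))
print(knn_predict(X, y, [6.5, 6.2], k=5))
```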

Random Forest

As we saw with decision trees, a single tree produces one classification label from the input features. The Random Forest algorithm, as you might infer, combines multiple decision trees and takes a majority vote of their labels (or the average of their outputs, for regression) to decide the final label for the input. To make the trees differ from one another, we train each tree on a random sample of the dataset drawn with replacement (a “bootstrap” sample), and at each split we let it consider only a random subset of the features. To classify a new data point, we pass it through every tree and pick the label that most trees vote for. Combining many decorrelated trees makes the model more robust: adding trees reduces the variance of the predictions and helps counter the overfitting a single deep decision tree is prone to, although the gains level off once there are enough trees.
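The sketch below puts the pieces together in plain Python: bootstrap samples, small Gini-based trees that consider a random subset of features at each split, and a majority vote. The function names, the default of 25 trees, and the tiny two-feature dataset are assumptions made for illustration.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, rng, depth=0, max_depth=3, n_split_features=1):
    """Grow a small Gini-based tree; leaves are labels, internal nodes are tuples."""
    if depth == max_depth or gini(y) == 0.0:
        return majority(y)
    # Random Forest twist: each split only looks at a random subset of features
    features = rng.sample(range(len(X[0])), n_split_features)
    best = None  # (weighted_gini, feature, threshold)
    for f in features:
        for t in sorted({row[f] for row in X}):
            left_labels = [lab for row, lab in zip(X, y) if row[f] <= t]
            right_labels = [lab for row, lab in zip(X, y) if row[f] > t]
            if left_labels and right_labels:
                score = (len(left_labels) * gini(left_labels)
                         + len(right_labels) * gini(right_labels)) / len(y)
                if best is None or score < best[0]:
                    best = (score, f, t)
    if best is None:
        return majority(y)
    _, f, t = best
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    left = build_tree([X[i] for i in left_idx], [y[i] for i in left_idx],
                      rng, depth + 1, max_depth, n_split_features)
    right = build_tree([X[i] for i in right_idx], [y[i] for i in right_idx],
                       rng, depth + 1, max_depth, n_split_features)
    return (f, t, left, right)


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def train_forest(X, y, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(X) rows with replacement
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], rng))
    return forest


def predict_forest(forest, x):
    # Majority vote over the labels predicted by the individual trees
    return majority([predict_tree(tree, x) for tree in forest])


# Made-up two-feature dataset
X = [[1.0, 1.0], [2.0, 1.0], [1.0, 2.0], [2.0, 2.0],
     [6.0, 6.0], [7.0, 6.0], [6.0, 7.0], [7.0, 7.0]]
y = ["A", "A", "A", "A", "B", "B", "B", "B"]
forest = train_forest(X, y)
print(predict_forest(forest, [1.0, 1.0]), predict_forest(forest, [7.0, 7.0]))
```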

Principal Component Analysis

Principal Component Analysis (PCA) belongs to a family of “dimensionality reduction” algorithms. What exactly is this, you may ask? Imagine data points plotted in more than three dimensions. In this case, it becomes challenging to visualize where each point lies and how it relates to other data points, right? Now, suppose some groups of features are correlated with one another. Is it possible to bring down the overall dimension of the input by somehow combining those correlated features? This is the general idea of PCA. It computes new features, each a linear combination of the original ones, that are uncorrelated with each other and ordered by how much of the data’s variance they capture. These new features are called “principal components.” By keeping only the first few principal components, we reduce the dimension of the input while retaining most of its variance, which is why PCA counts as a dimensionality reduction algorithm.
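To see how a principal component comes out of the data, here is a plain-Python sketch that finds the first principal component as the top eigenvector of the covariance matrix, using power iteration. It assumes the features are not all constant and that the top eigenvalue is clearly larger than the next one (otherwise power iteration converges slowly); the 3-feature dataset is made up.

```python
import math


def first_principal_component(X, n_iter=100):
    """Return (component, explained_variance_ratio, projected_values)."""
    n, d = len(X), len(X[0])
    # 1. Center each feature at zero mean
    means = [sum(row[j] for row in X) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in X]
    # 2. Sample covariance matrix (d x d); correlated features give large off-diagonal entries
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # 3. Power iteration: repeatedly apply cov and renormalize to reach its top eigenvector
    v = [1.0] * d
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance captured along v, as a share of the total variance
    top_variance = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total_variance = sum(cov[j][j] for j in range(d))
    # 4. Project each centered point onto v: d features become 1
    projected = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return v, top_variance / total_variance, projected


# Made-up data with three strongly correlated features
data = [[1.0, 2.1, 0.9], [2.0, 3.9, 2.1], [3.0, 6.2, 2.9],
        [4.0, 7.8, 4.2], [5.0, 10.1, 5.0]]
component, ratio, projected = first_principal_component(data)
print("first principal component:", component)
print(f"share of variance explained: {ratio:.3f}")
print("1-D representation:", projected)
```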

AdaBoost Algorithm

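What is Boosting? Boosting combines many weak learners (models only slightly better than random guessing) into one strong learner. The weak learners are trained one after another, each focusing on the examples its predecessors got wrong, and their predictions are combined through a weighted vote. We use this practice because different models capture different outlooks on the same dataset. AdaBoost, short for Adaptive Boosting, is a widespread Machine Learning technique that typically uses Decision Trees with only one split, known as “decision stumps,” as its weak learners. After each round, it increases the weights of the training points that the latest stump misclassified, so the next stump concentrates on them, and it gives each stump a larger say in the final vote the more accurate that stump is. AdaBoost is one of the best-known boosting algorithms, and it is the one beginners should learn first among them.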
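The following plain-Python sketch shows AdaBoost with decision stumps on a made-up one-feature dataset whose labels (+, +, -, -, +, +) no single stump can separate. It assumes labels of +1/-1 and uses the classic discrete AdaBoost stump weight `alpha = 0.5 * ln((1 - err) / err)`; the number of rounds is an illustrative choice.

```python
import math


def stump_predict(stump, x):
    # A one-split tree: predict `polarity` above the threshold, the opposite below
    f, t, polarity = stump
    return polarity if x[f] > t else -polarity


def train_stump(X, y, weights):
    """Return (weighted_error, feature, threshold, polarity) of the best stump."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for polarity in (1, -1):
                err = sum(w for row, yi, w in zip(X, y, weights)
                          if stump_predict((f, t, polarity), row) != yi)
                if best is None or err < best[0]:
                    best = (err, f, t, polarity)
    return best


def adaboost(X, y, n_rounds=10):
    n = len(X)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, stump)
    for _ in range(n_rounds):
        err, f, t, polarity = train_stump(X, y, weights)
        if err >= 0.5:  # no stump beats random guessing; stop early
            break
        err = max(err, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # more accurate stump, bigger vote
        stump = (f, t, polarity)
        ensemble.append((alpha, stump))
        # Raise weights of misclassified points, lower the rest, then renormalize
        weights = [w * math.exp(-alpha * yi * stump_predict(stump, row))
                   for row, yi, w in zip(X, y, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


# Made-up data: the positive class sits on both ends of the range
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(X, y, n_rounds=3)
for alpha, stump in ensemble:
    print(f"alpha={alpha:.3f} stump={stump}")
print([predict(ensemble, x) for x in X])
```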

Artificial Neural Network

This algorithm is loosely inspired by the human nervous system. Artificial Neural Networks (ANNs) are the natural first step toward understanding how ideas borrowed from the nervous system shaped Deep Learning algorithms, so they form the foundation for any beginner who wants to take on Neural Networks. An ANN is organized into layers of neurons, each layer has its own weights and biases, and the outputs of one layer become the inputs of the next.
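To illustrate weights, biases, and layers feeding into each other, here is a plain-Python forward pass through a tiny 2-2-1 network with sigmoid activations. The weights are picked by hand so that the network computes XOR (an assumption for illustration); in practice, they are learned from data, typically with backpropagation and gradient descent.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    # Each neuron: weighted sum of the inputs plus its bias, then the activation
    return [sigmoid(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]


def forward(x, layers):
    # The output of one layer becomes the input of the next
    for weights, biases in layers:
        x = dense_layer(x, weights, biases)
    return x


# Hand-picked (not learned) weights for a 2-2-1 network that computes XOR:
# hidden neuron 1 acts like OR, hidden neuron 2 like NAND, the output like AND
xor_net = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),  # hidden layer
    ([[20.0, 20.0]], [-30.0]),                        # output layer
]

for a in (0, 1):
    for b in (0, 1):
        out = forward([a, b], xor_net)[0]
        print(a, b, round(out, 3))
```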

Conclusion

This article is for beginners and everyone who wants to cover the basics of Machine Learning. It is a starting point to help beginners find their footing in this rapidly growing field. It is up to you to study each of these methods in depth. Practice as much as you can and code even more, because that will give you a firm grip on these topics. Happy Coding!


Did you like what Sanchet Sandesh Nagarnaik wrote? Thank them for their work by sharing it on social media.